Flickr user Book Sprints, flickr.com/photos/129798087@N03/

If I ask you to picture “big data,” what do you think of?

Perhaps your mind goes to a company like Google, which crunches massive amounts of data to provide seemingly magical conveniences like voice recognition or turn-by-turn directions. Or maybe you think of a government agency, like the NSA, which sifts through the patterns of our phone calls and emails to gain uncanny insight into our daily lives.

You probably didn’t think first of a grantmaking foundation, social justice group, or humanitarian assistance organization. Compared to government agencies and big companies, key players in the social sector lag far behind in realizing the potential of data-intensive methods. Most of those leading today’s efforts to help the vulnerable, speak truth to power, and narrow gaps in opportunity are only just beginning to embrace new methods that are producing sweeping gains—and raising new risks—across the rest of society.

A few are trying to jump into the ring: During the Ebola crisis, for example, funders and NGOs pushed hard for the release of cell phone call data records—but they appeared to bypass meaningful reflection about the privacy implications or whether that type of data would actually help. Many groups in the U.S. are interested in critiquing pre-trial risk assessment tools—but they lack the quantitative know-how to perform the robust statistical analyses to determine whether these tools are discriminatory.

What’s at stake for civil society groups is not only their operational efficiency, but ultimately their effectiveness. In order to succeed in reshaping society, civil society groups must be able to responsibly use the most powerful tools at their disposal—and must also understand how public and private institutions use those powerful tools, and how decisions really get made.

What’s been most prominent in the social sector’s discussion of big data so far has been critique: highlighting various ways that new, data-intensive methods used by companies or governments may reinforce bias, make mistakes, violate personal autonomy, or otherwise perpetuate injustice. Social sector advocates’ natural skepticism, coupled with a lack of direct, hands-on experience using these new methods, creates the risk of a vicious circle, where the social sector’s input into key institutions may become less informed and less relevant over time.

To get to a better place, NGOs and the major foundations that support them need to develop a more concrete understanding of big data’s promise and challenges, so that awareness of big data’s risks no longer acts as a barrier to positive action.

This summer, the Ford and MacArthur foundations asked our team at Upturn to take a look at this challenge: What risks do new, large-scale methods of gathering and analyzing data pose for the work and priorities of large foundations, and what can be done to move forward powerfully in a way that addresses those risks? Data ethics was our topic—a deliberately broad term for potential harms to philanthropic beneficiaries and priorities that can arise in connection with data at scale. We were particularly interested in clarifying which, if any, hazards of these new technologies may not be well addressed by the existing grantmaking process.

Today we’re publishing the full report of our findings. It reflects conversations with 15 key players in the grantmaking enterprise at major foundations, together with an extensive interdisciplinary literature review. In the process, we confirmed that foundations are home to deep and varied expertise, and that a range of risk controls, including human subjects protections and best practices for data privacy, address many of the potential challenges with data-intensive grants.

We found three basic challenges in data-oriented grantmaking that are not well addressed by the grantmaking process as it exists today.

- Some new data-intensive research projects involve meaningful risk to vulnerable populations, but are not covered by existing human subjects regimes, and lack a structured way to consider these risks. In the philanthropic and public sectors, human subjects review is not always required, and program officers, researchers, and implementers do not yet have a shared standard by which to evaluate the ethical implications of using public or existing data, which is often exempt from human subjects review.

- Social sector projects often depend on data that reflects patterns of bias or discrimination against vulnerable groups, and face the challenge of how to avoid reinforcing these existing disparities. Automated decisions can absorb and sanitize bias from input data, and responsibly funding or evaluating statistical models in data-intensive projects increasingly demands advanced mathematical literacy, which foundations lack.

- Both data and the capacity to analyze it are being concentrated in the private sector, which could marginalize academic and civil society actors. Some individuals and organizations have begun to call attention to these issues and create their own trainings, guidelines, and policies—but ad hoc solutions can accomplish only so much.

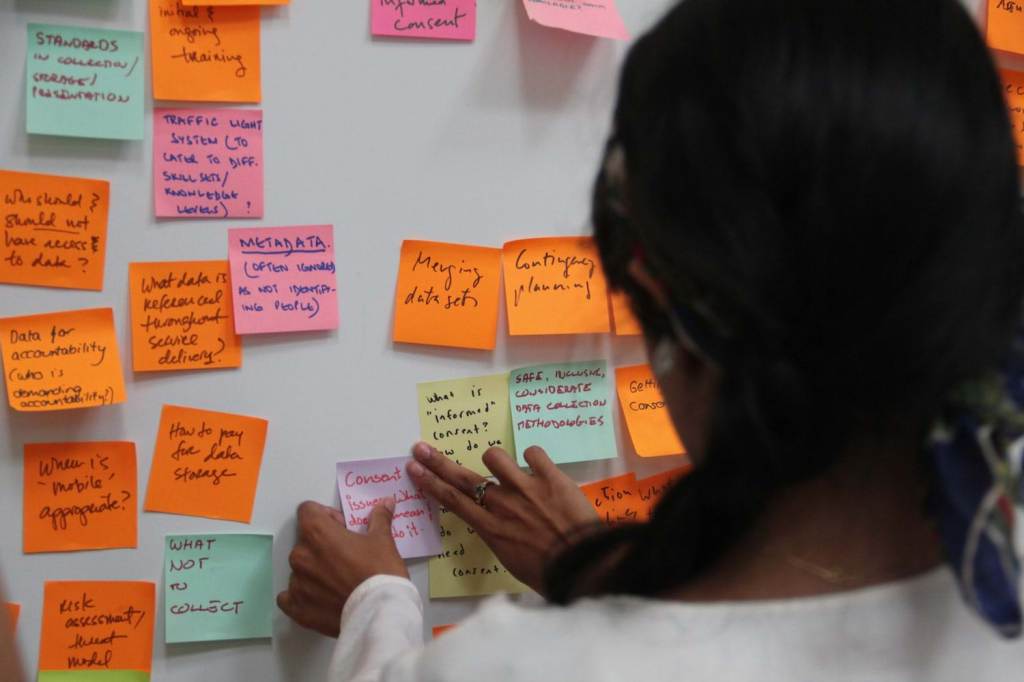

These challenges don’t have easy answers, so one of the most important things foundations can do is to invest in learning. That means offering training on data issues, including data ethics, for program staff and grantees. It means encouraging trial and error, exchange of experiences, and collection of lessons learned. And it means starting with a list of key questions that grantmakers and foundation leadership can ask to make sure that they’ve covered their bases. Those questions are:

- For a given project, what data should be collected, and who should have access to it?

- How can projects decide when more data will help—and when it won’t?

- How can grantmakers best manage the reputational risk of data-oriented projects that may be at a frontier of social acceptability?

- When concerns are recognized with respect to a data-intensive grant, how will those concerns get aired and addressed?

- How can funders and grantees gain the insight they need in order to critique other institutions’ use of data at scale?

- How can the social sector respond to the unique leverage and power that large technology companies are developing through their accumulation of data and data-related expertise?

- How should foundations and nonprofits handle their own data?

- How can foundations begin to make the needed long-term investments in training and capacity?

Major foundations have a unique role to play in leveraging data at scale for social good, as well as in shaping norms around how this data is treated. We hope that the philanthropic community will engage in a thoughtful conversation about how to increase literacy around data use and data ethics, and will lead by example to advance awareness of these new opportunities and challenges across civil society.

This article originally appeared on Medium.

Photo credit: Book Sprints, Flickr/Creative Commons